A story by Barry Cipra in [the Mar. 25, 1988] issue of Science draws attention to a looming “crisis in mathematics.” The crisis has to do with the teaching of calculus, the branch of mathematics that has long been the cornerstone of education in science and engineering.

According to Cipra, students are coming to college calculus with poor backgrounds in algebra, geometry, and trig. Moreover, students do not understand why they must learn calculus when most of the relevant problems can be solved more expeditiously with sophisticated new hand-held calculators or computers.

I remember with delight my own undergraduate experience with calculus, back in the days when the most sophisticated computational device available to a student was a slide rule. I was dazzled by the beauty of the subject, impressed by its power to solve problems, overwhelmed by its philosophical ingenuity.

So enthralled was I by calculus that when I married I immediately set out to remedy what I perceived to be the most glaring gap in my spouse’s education. No one, I thought, could be truly happy without knowing calculus. What followed is best left unwritten. The marriage somehow survived my foolishness, and my spouse has managed to live a full and happy life without the calculus.

Mozart and the Brooklyn Bridge

I still think the calculus is one of the grandest achievements of the human mind, right up there with things like Mozart’s Requiem, Jefferson’s Declaration, and Roebling’s Brooklyn Bridge. But looking back, I realize that I seldom used calculus in my career. In my teaching, yes, but not in research or in everyday life. And I would guess that my experience is typical of the majority of students who have successfully traversed the casualty-strewn minefield of Calculus 101–102.

So why does calculus loom so large in the education of scientists and engineers? The answer, of course, is that since the 17th century the laws of nature as we understand them have been expressed in the language of calculus.

The calculus was invented by Newton and Leibniz precisely to treat problems of motion and change that arose with the invention of modern science. There was a close correspondence between the new mathematics and a new metaphor that 17th century scientists used to describe the world. In the view of Newton’s contemporaries, the world is a machine, a sort of elaborate mechanical clock, set going by the Great Clockmaker. The machine runs smoothly in continuous space and time, and calculus is the language that best describes continuous change.

But wait! Is it possible that a new metaphor for describing the universe is emerging in our own time? Is there something in our lives — now, in the late 20th century — more impressive to us than were mechanical clocks to the contemporaries of Newton? And who is this man, featured on the cover of [the Apr. 1988] issue of The Atlantic, who says that the universe is not a clock but a computer, contrived and programmed by the Great Programmer?

Pure digital data

The man is Edward Fredkin, an iconoclastic computer scientist who for a time was associated with the MIT Laboratory for Computer Science, and he is serious. Fredkin imagines a universe that is not made of matter and energy distributed in continuous space and time, but of elaborate patterns of discrete bits, pure digital information — ones and zeros, if you like — ticking away, and changing according to a programmed rule, like bits stored in the memory of a computer. There is a new mathematics behind these patterns of changing bits. It is called the theory of cellular automata, and many physicists, at MIT and elsewhere, are exploring its potential.
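For readers who have never seen a cellular automaton, a small sketch may help make "bits changing according to a programmed rule" concrete. What follows is not Fredkin's model of the universe; it is only the simplest textbook case, a one-dimensional "elementary" automaton on a ring of cells, with the rule number following Wolfram's convention. The function names `step` and `run`, the wrap-around edges, and the choice of rules 90 and 110 are assumptions made for illustration.

```python
def step(cells, rule=110):
    """Advance a row of bits by one tick of an elementary cellular automaton.

    Each new bit depends only on the old bit and its two neighbors.
    The 8 bits of `rule` (Wolfram numbering) give the output for each of
    the 8 possible neighborhoods. Edges wrap around (an assumption).
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[(i - 1) % n]
        center = cells[i]
        right = cells[(i + 1) % n]
        # Read the neighborhood as a 3-bit number from 0 to 7.
        index = (left << 2) | (center << 1) | right
        # Look up the corresponding bit of the rule number.
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(cells, ticks, rule=110):
    """Return the starting row followed by `ticks` successive rows."""
    history = [list(cells)]
    for _ in range(ticks):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    # Start with a single "one" in a field of "zeros" and let it tick.
    row = [0] * 31
    row[15] = 1
    for generation in run(row, 15, rule=90):
        print("".join("#" if bit else "." for bit in generation))
```

Run as a script, this prints one row per tick, so the pattern can be watched as it unfolds from a single live cell. Nothing in it is continuous: space is a row of cells, time is a count of ticks, and the "law" is a lookup table of eight entries.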

The cellular-automata theorists have had a few successes, but they have yet to find digital “laws of nature” that describe the world with anything remotely resembling the success of conventional physics, and until they do Edward Fredkin’s extravagant views must be considered as wild, even theological, speculation. For the time being, calculus remains the premier language of science.

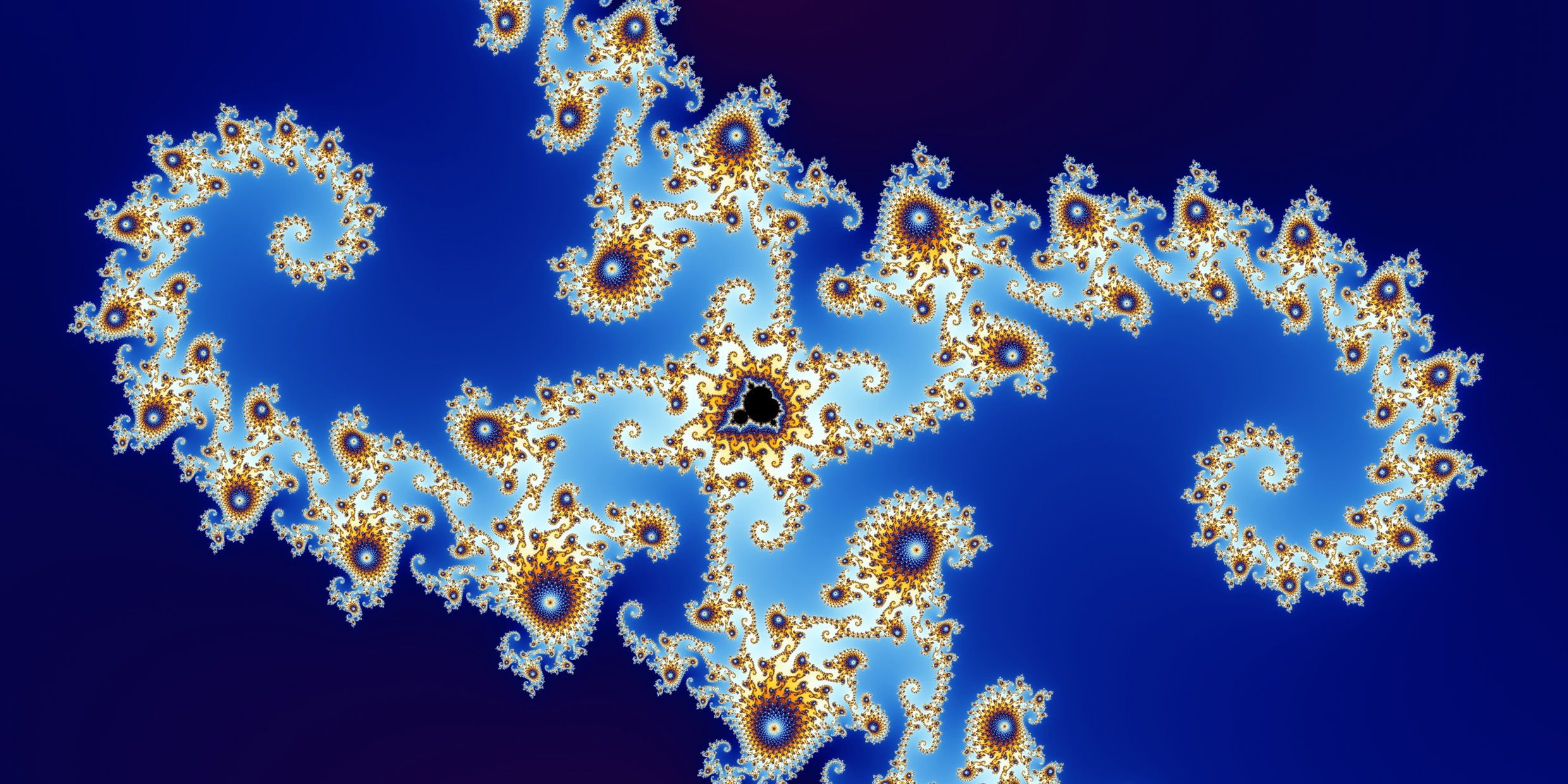

But I wouldn’t sell short the idea of “the world as a computer.” Computers have dramatically changed the way science is done, and cellular automata — those little universes of flickering bits in the memories of computers — have delightfully mimicked certain aspects of the real world. So have fractal geometries, another rapidly emerging field of computer-based mathematics. And there is certainly more to come.

The more significant computers become in our lives, the more likely they are to dominate our imaginations. The computer is a provocative metaphor, and a good metaphor is a powerful stimulus to creativity.

Today’s undergraduate scientists and engineers need calculus if they are to understand the way the world works. But the day may come when the calculus of Newton and Leibniz will go the way of slide rules and mechanical clocks. When everything from timekeepers to music has been “digitized,” can physics be far behind? Maybe somewhere, right now, a foolish young physicist, hooked on the theory of cellular automata, is trying to inspire a reluctant spouse to learn the new mathematics of permuting bits.