Originally published 14 September 1998

Are you ready for teraflops? Picoseconds?

Wait! Before we get to tera and pico, let’s pause to remember mega and micro.

It seems like only yesterday that mega and micro were all the rage, whisking us to new limits of technology.

Mega is the metric prefix for “million.” A typical home computer has megabytes of built-in memory; that is, it can store millions of alphabetic or numeric characters.

Micro is the prefix for one-millionth. Computers do calculations in millionths of a second.

Computer pioneers like Steve Jobs reaped megabucks selling us machines that did megajobs in microseconds.

How quickly things change. Today, mega and micro seem quaintly old hat, and giga and nano preside. Even bottom-of-the-line computers these days have hard drives with gigabytes of storage.

Giga is the metric prefix for “billion” (a thousand million).

Bill Gates plumps his gigabuck fortune selling programs that require gigabytes of hard-disk space.

The calculation speeds of the fastest computers are described in nanoseconds, or billionths of a second, and engineers are talking about using computer-chip technology to build robotic machines only nanometers in size. Nano is a thousand times smaller than micro.

Young technogeeks now babble about giga and nano the way we oldsters once talked about kilo and milli.

And even before we manage to get comfortable with giga and nano, along come tera and pico.

Teraflop is the hot new word.

Tera is the prefix for trillion. Flop is short for “floating-point operation,” a kind of computer calculation performed with a movable decimal point. A teraflop computer can do a trillion floating-point operations per second — a thousand gigaflops, a thousand thousand megaflops.

That averages out to a calculation every picosecond, or trillionth of a second.
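For readers who like to check the arithmetic, here is a minimal Python sketch of the prefix ladder. The dictionary and function names are illustrative inventions, not anything from the machines discussed, and the per-operation time is an average over a sustained rate, not a claim about how any single calculation is timed.

```python
from fractions import Fraction

# SI prefixes named in the column, as exact powers of ten.
LARGE = {"kilo": 10**3, "mega": 10**6, "giga": 10**9,
         "tera": 10**12, "peta": 10**15}
SMALL = {"milli": Fraction(1, 10**3), "micro": Fraction(1, 10**6),
         "nano": Fraction(1, 10**9), "pico": Fraction(1, 10**12)}


def average_seconds_per_operation(ops_per_second):
    """Average time per operation for a machine sustaining the given rate."""
    return Fraction(1, ops_per_second)


teraflop = LARGE["tera"]  # floating-point operations per second

print(teraflop // LARGE["giga"])  # gigaflops in a teraflop: 1000
print(teraflop // LARGE["mega"])  # megaflops in a teraflop: 1000000
print(average_seconds_per_operation(teraflop) == SMALL["pico"])  # True
```

Exact fractions are used instead of floating-point numbers so that the comparison with a picosecond is an equality and not an approximation.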

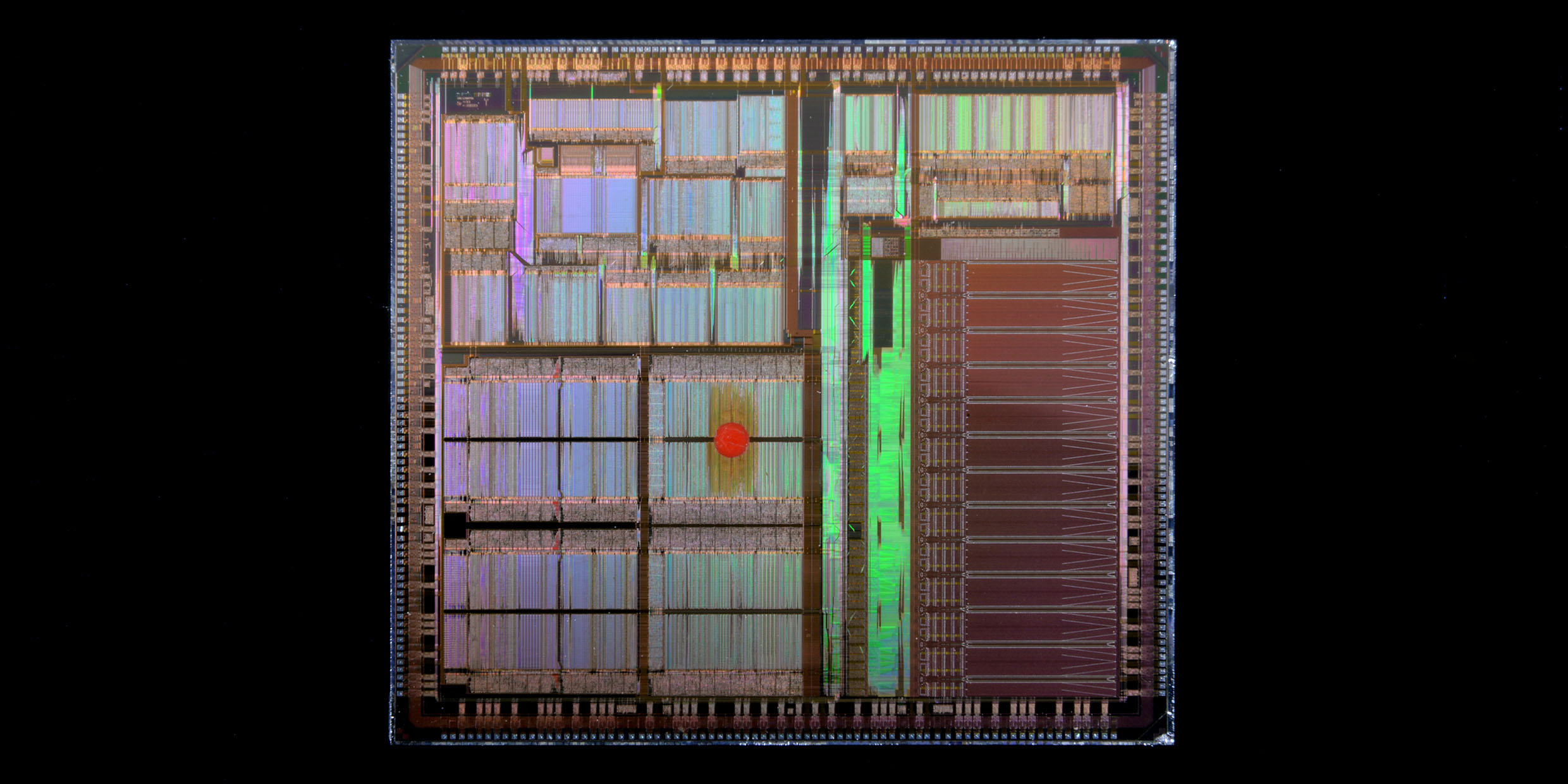

Japanese engineers are designing a 32-teraflop machine that will consist of thousands of gigaflop processors linked in parallel, all working at once and exchanging information with one another.

With such a machine, they hope to build a “virtual Earth,” a mathematical model of the Earth’s oceans and atmosphere that will provide more precise predictions of phenomena such as global warming, air and water pollution, and El Niño weather.

Of course, this will not be the first digital simulation of the Earth. The National Center for Atmospheric Research in Colorado and the UK Meteorological Office, among others, have long been using sophisticated computer models of the Earth’s dynamic systems.

But 32 teraflops will bring us closer than ever to putting the real Earth in a box.

Meanwhile, other scientists are trying to get the entire universe into a box. A multinational consortium of astrophysicists and computer scientists has programmed a supercomputer at the Max Planck Society’s computing center in Garching, Germany, to simulate the evolution of the early universe.

The team is especially interested in discovering whether slight density variations in the matter of the early universe, caused by “quantum fluctuations” in the Big Bang, might have amplified to become the kinds of galactic clusters we observe today.

In this new simulation, 10 galaxies are represented by a single dot of data. Of course, this is a huge simplification of the real universe — trillions of stars and planets reduced to a dot! Nevertheless, the program mimics the entire visible universe of galaxies interacting over 10 billion years of simulated time.

Each run of the simulation requires about 70 hours of calculation and generates nearly a terabyte of data showing how galaxies were distributed at various moments of cosmic time.
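Those two figures imply a rough average output rate, which a few lines of Python can work out. This is a back-of-envelope sketch only: it assumes a decimal terabyte (10^12 bytes) and treats "nearly a terabyte" as a full one, so the result is an upper estimate, and the function name is made up for the illustration.

```python
TERABYTE = 10**12   # bytes; assumes the decimal definition
RUN_HOURS = 70      # approximate length of one simulation run


def average_output_rate(total_bytes, hours):
    """Average bytes written per second over a run of the given length."""
    return total_bytes / (hours * 3600)


rate = average_output_rate(TERABYTE, RUN_HOURS)
print(f"{rate / 10**6:.1f} MB/s")  # 4.0 MB/s
```

In other words, the simulation writes out on the order of four megabytes of results for every second it runs.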

The computer modelers try various theoretical scenarios for what the universe was like in the earliest days, and see which sort of beginning leads to a mature universe that most closely matches the one we observe with our telescopes.

Historical sciences, such as cosmology or evolution, do not lend themselves to experimental testing of the usual sort; after all, we can’t do experiments with the entire universe in the laboratory, nor do we have billions of real years to perform experiments on evolution. But we can build mathematical simulations of cosmic space and geologic time that tell us whether our theories reasonably account for the world we observe.

Will scientists ever build a universe-in-a-box that contains stars, planets, life, and mind, all interacting over billions of years of simulated time? For the first steps in that direction, even teraflop machines will not be enough. We must await the arrival of petaflop computers (a thousand teraflops).

Considering how fast we progressed from kilo to mega to giga to tera, can peta be far behind?